Generate image metadata. Locally.

Create rich, structured metadata from images using local vision models — without uploads, subscriptions, or data leaving your Mac.

Requires an Apple Silicon Mac running macOS 26 (Tahoe) or later

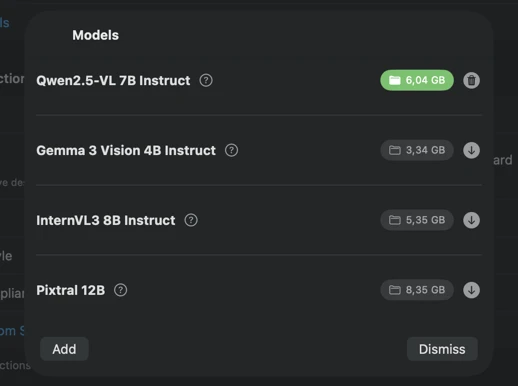

Choose a model — or bring your own

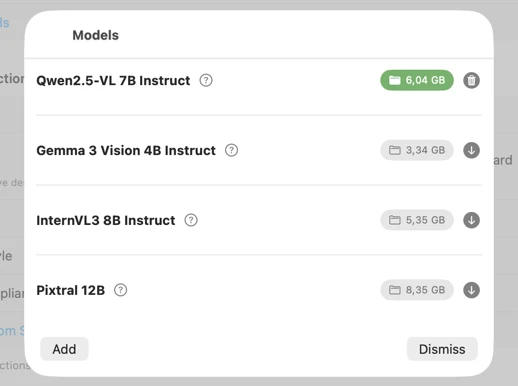

Download preconfigured vision models in-app, or link your own GGUF model and projector files. Choose the model that best fits your images and quality requirements, then adjust the generation parameters to get consistent, repeatable results. All processing runs locally on Apple Silicon (M1 or later), using your Mac's own hardware instead of a cloud service.

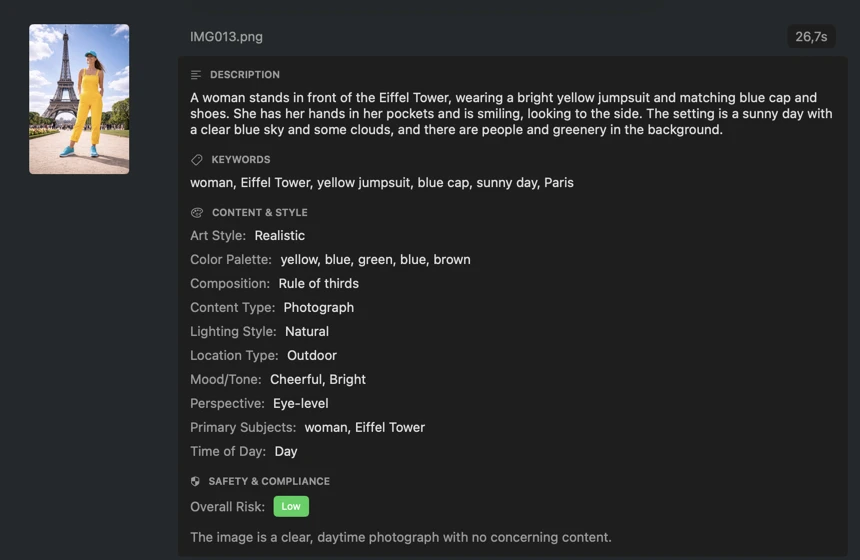

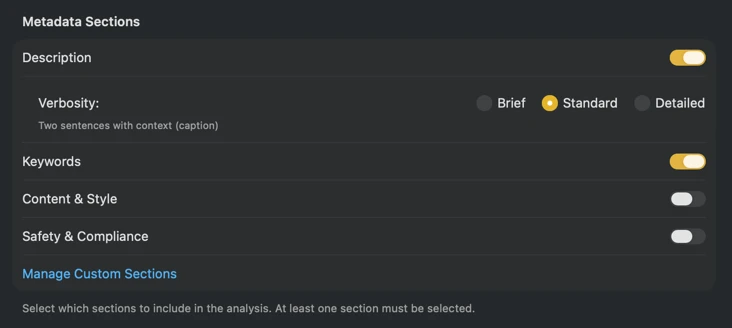

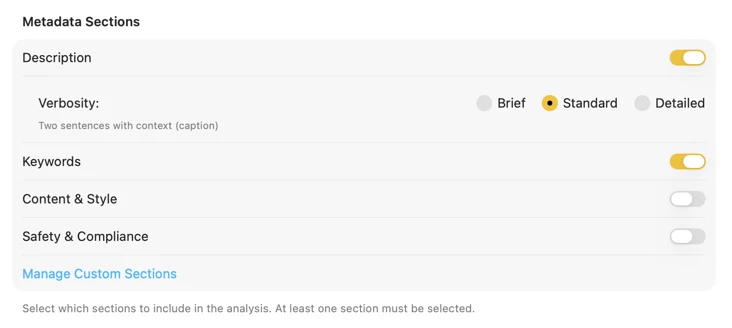

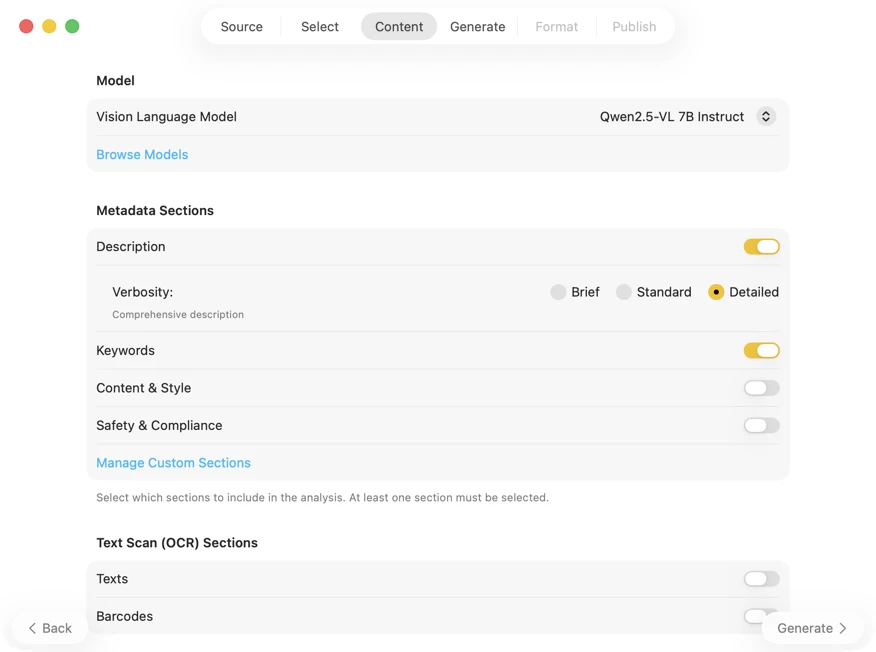

Define your own metadata schema

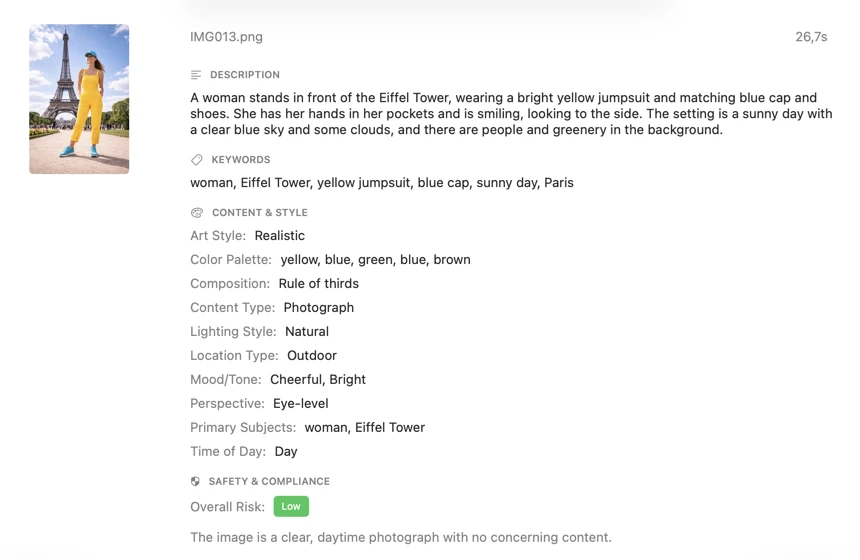

Generate only the metadata you actually need. Enable built-in sections such as Description, Keywords, Content & Style, and Safety & Compliance, then extend them with custom sections and fields tailored to your workflow. For each field, choose a data type (Boolean, Text, or List of Texts) and write a prompt that tells the model exactly what to extract. The result is structured metadata that follows your conventions and stays consistent across large batches.
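To make the three field types concrete, here is a minimal sketch of how a custom field (name, data type, prompt) could be represented, and how a model's free-text answer might be coerced into the chosen type. The names `FieldType`, `MetadataField`, and `coerce` are hypothetical illustrations, not VisionTagger's internal format or API, and the hard-coded answers stand in for real model output.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Union


class FieldType(Enum):
    # The three data types described for custom fields
    BOOLEAN = "Boolean"
    TEXT = "Text"
    LIST_OF_TEXTS = "List of Texts"


@dataclass
class MetadataField:
    # Hypothetical representation of one custom field
    name: str
    field_type: FieldType
    prompt: str  # instruction that would be sent to the vision model


Value = Union[bool, str, list[str]]


def coerce(field: MetadataField, raw_answer: str) -> Value:
    """Convert a model's raw text answer into the field's declared type."""
    text = raw_answer.strip()
    if field.field_type is FieldType.BOOLEAN:
        # Treat common affirmative answers as True, anything else as False
        return text.lower().rstrip(".") in {"yes", "true", "1"}
    if field.field_type is FieldType.LIST_OF_TEXTS:
        # Split on commas or newlines, dropping empty items and duplicates
        items = [part.strip() for part in text.replace("\n", ",").split(",")]
        unique: list[str] = []
        for item in items:
            if item and item not in unique:
                unique.append(item)
        return unique
    # Plain Text fields keep the trimmed answer as-is
    return text


fields = [
    MetadataField("has_people", FieldType.BOOLEAN, "Are there people visible? Answer yes or no."),
    MetadataField("mood", FieldType.TEXT, "Describe the mood in one short phrase."),
    MetadataField("props", FieldType.LIST_OF_TEXTS, "List notable props, comma-separated."),
]
# Stand-in answers; a real run would get these from the local model
answers = ["Yes.", "  calm, early morning ", "umbrella, bicycle\nbench, umbrella"]
record = {f.name: coerce(f, a) for f, a in zip(fields, answers)}
print(record)
```

Typing each field up front is what keeps results uniform across a large batch: every image yields the same keys with the same value shapes, which makes downstream export to JSON, XMP, or Finder tags predictable.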

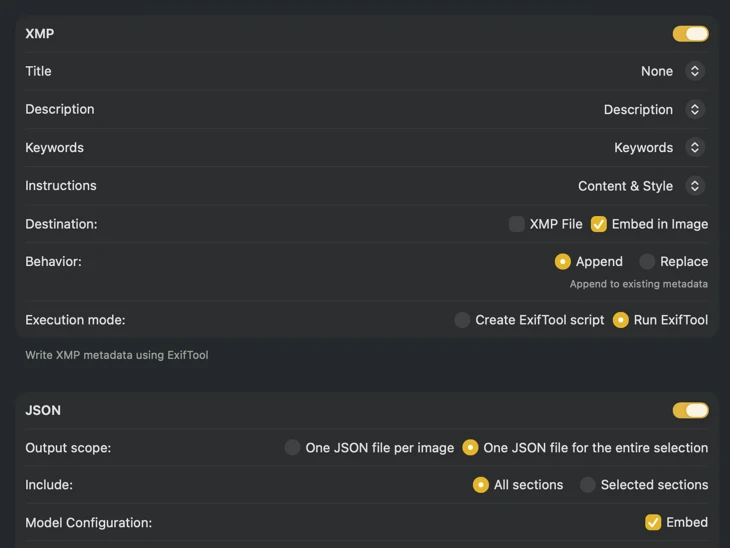

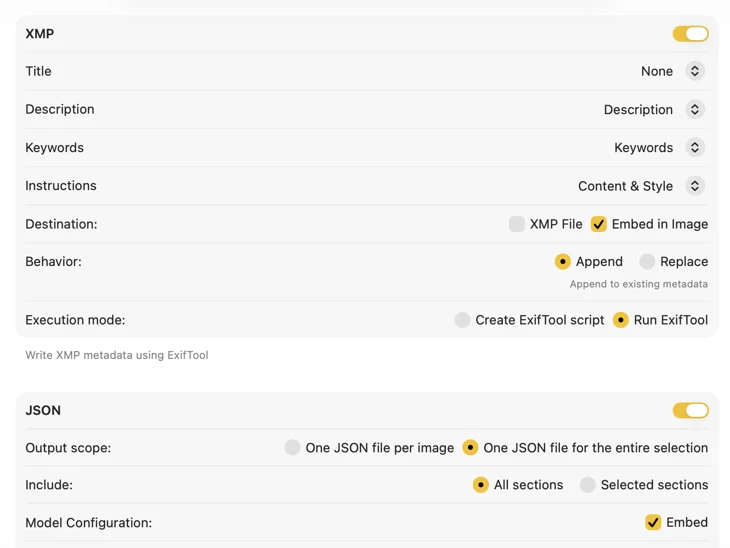

Export metadata where you need it

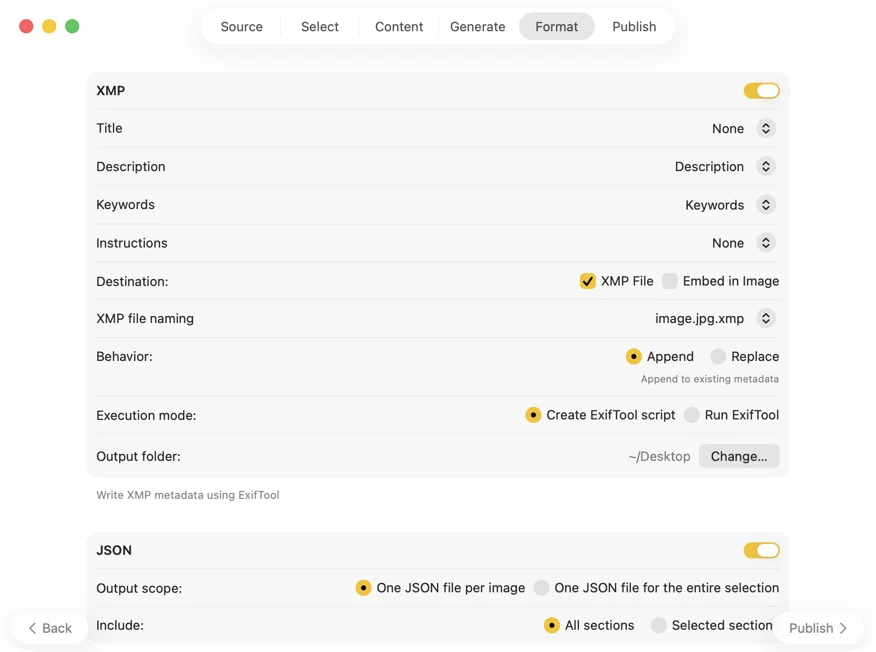

Publish metadata in the format that best fits your pipeline. For XMP sidecars and embedded metadata, VisionTagger integrates with ExifTool, a widely used, industry-standard metadata utility. The written metadata appears in apps such as Adobe Lightroom, Bridge, Capture One, Photo Mechanic, darktable, and any other software that reads XMP. You can also write back to your Photos Library, export a JSON or TXT file per image, or generate a single file for an entire run, and add Finder tags for quick organization in macOS. Select multiple outputs and configure them together, so one generation pass can feed every destination you use.

Use cases

- Anyone who wants to find that one image later: generate searchable descriptions, keywords, and tags for your library.
- Photographers managing large shoots: speed up culling, cataloging, delivery, and archive workflows with consistent metadata.
- Designers organizing asset libraries: make mood, style, subject matter, and usage easier to search across projects.
- Researchers and archivists tagging collections: create structured, consistent metadata for datasets, records, and long-term preservation.

System requirements

- macOS Tahoe 26.0 or later
- Apple Silicon (M1 or later) required
- 16 GB RAM or more recommended for optimal performance with larger models
- Model storage: plan for about 4–8 GB per model (stored locally)

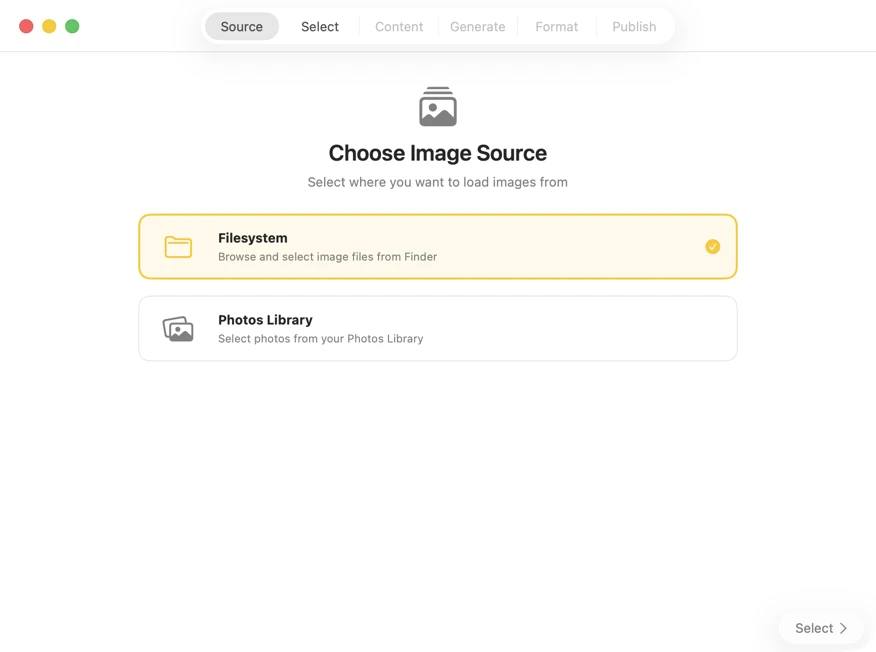

From images to metadata — in six steps

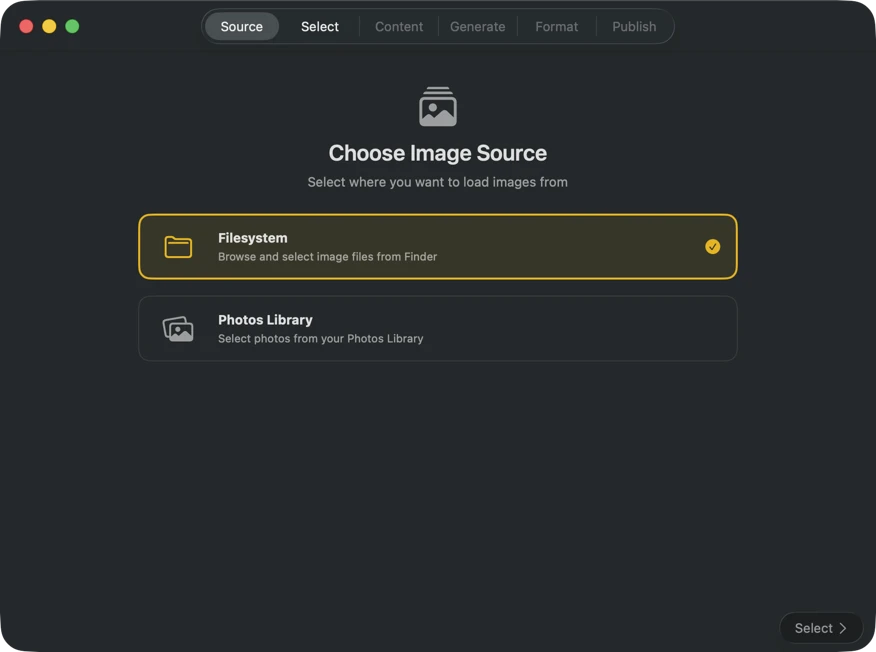

Choose where your images are stored — folders on your Mac or your Photos Library.

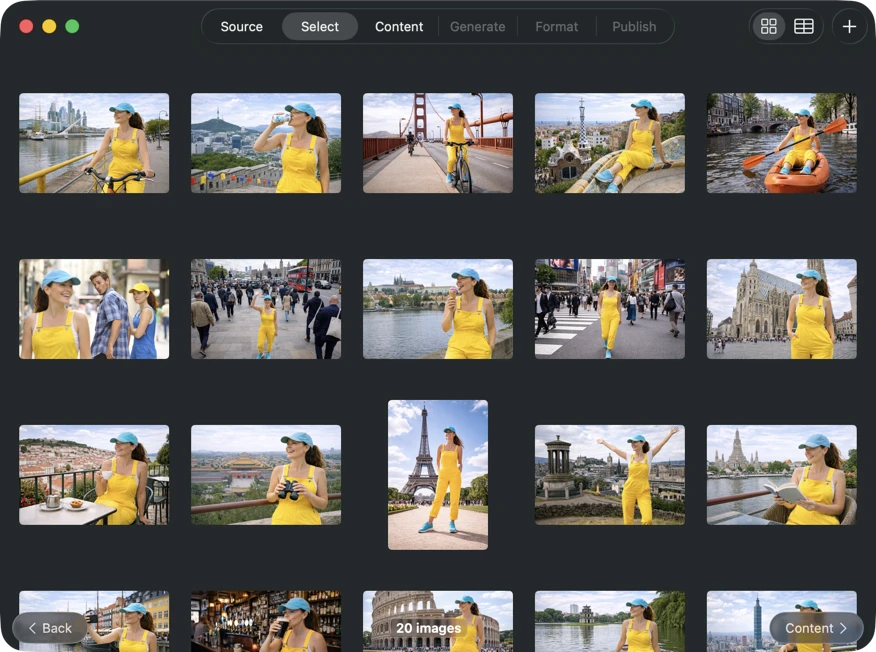

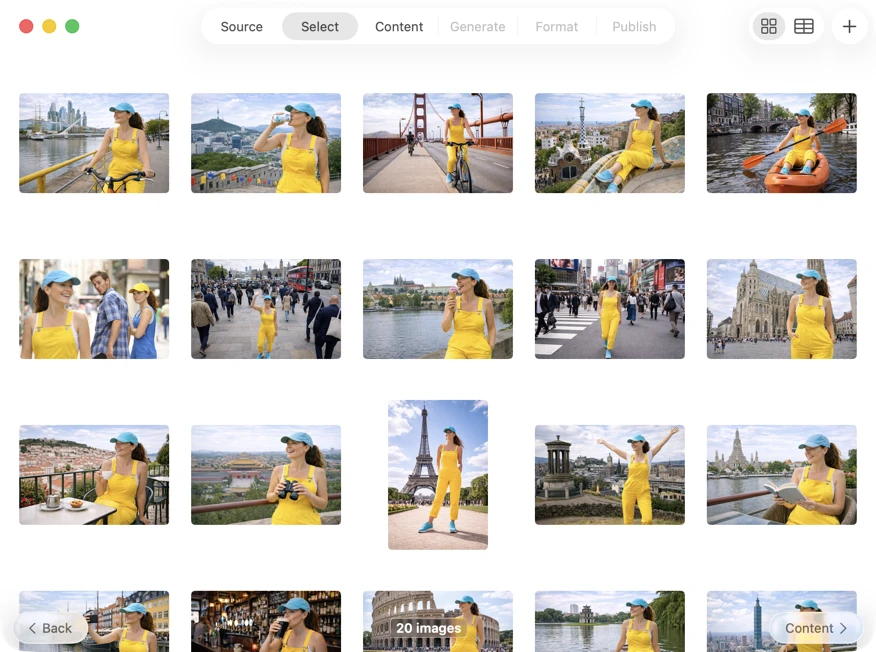

Pick exactly what you want to process. Browse your selection in a grid or table view, and build a batch by adding more images with the Add button or by dragging and dropping files into the app.

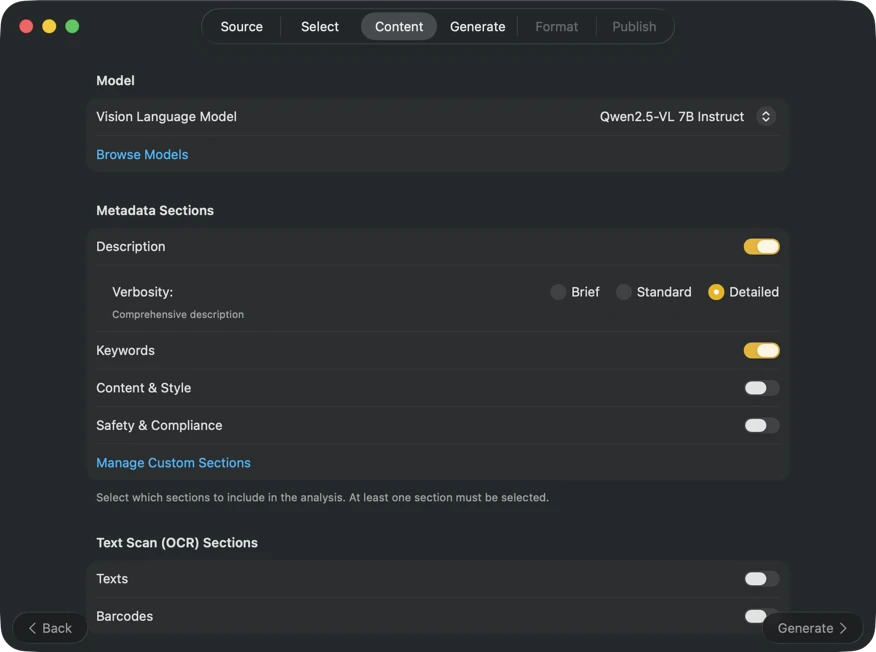

Choose how metadata is generated. Download one of the preconfigured vision models, or link your own GGUF model and projector files. Then select the metadata sections you want (Description, Keywords, Content & Style, Safety & Compliance) and, optionally, add custom sections with your own fields, data types, and prompts for fully tailored output.

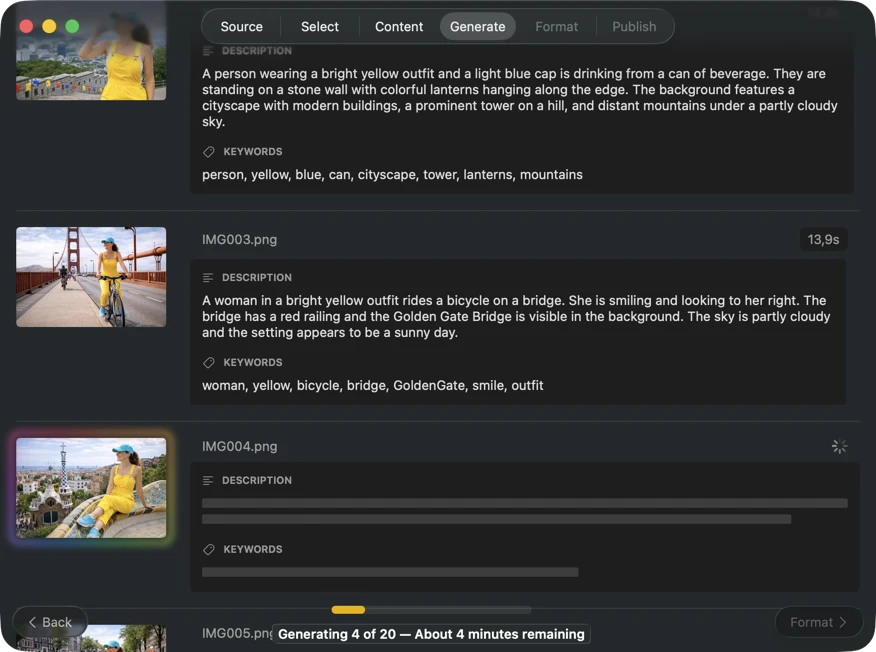

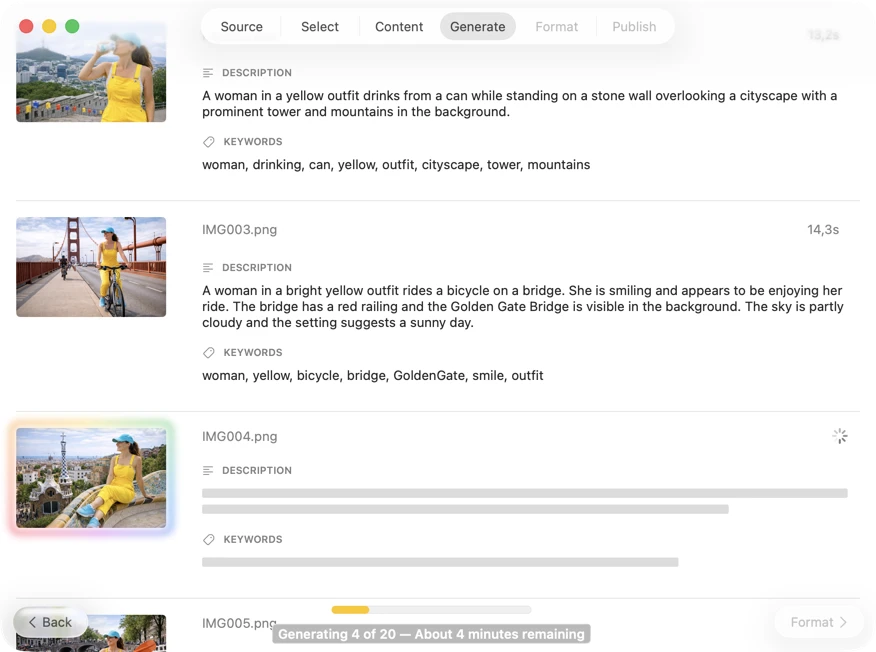

Watch results appear in real time. VisionTagger processes images locally and streams generated metadata into a scrolling list as soon as each item is ready, so you can review and edit outputs while the batch continues.

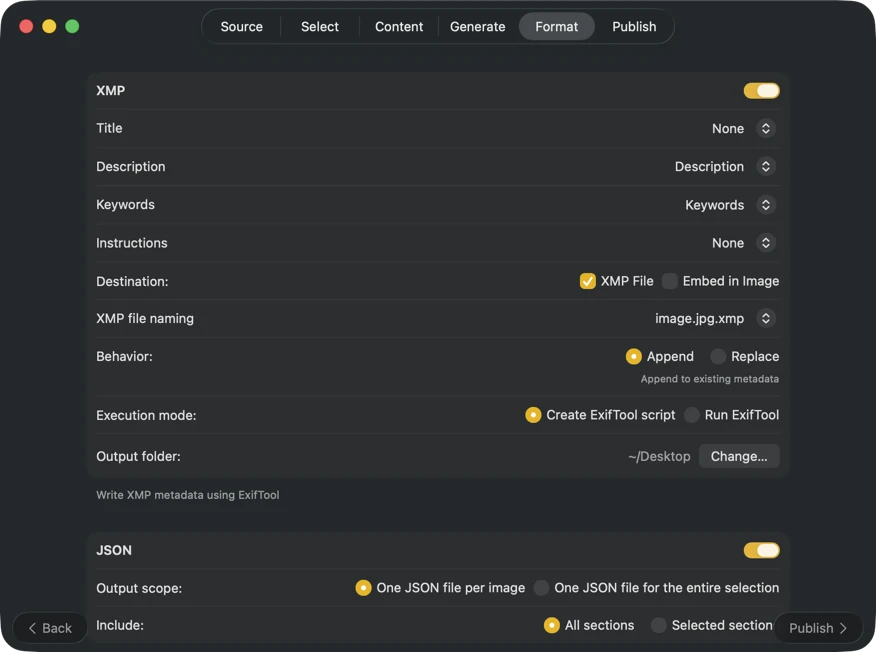

Decide where the metadata should go. Export per-image or per-batch files (JSON or TXT), apply Finder tags, and configure multiple outputs at once. For XMP sidecars and embedded metadata, VisionTagger uses ExifTool (installed separately) for reliable, widely compatible results.

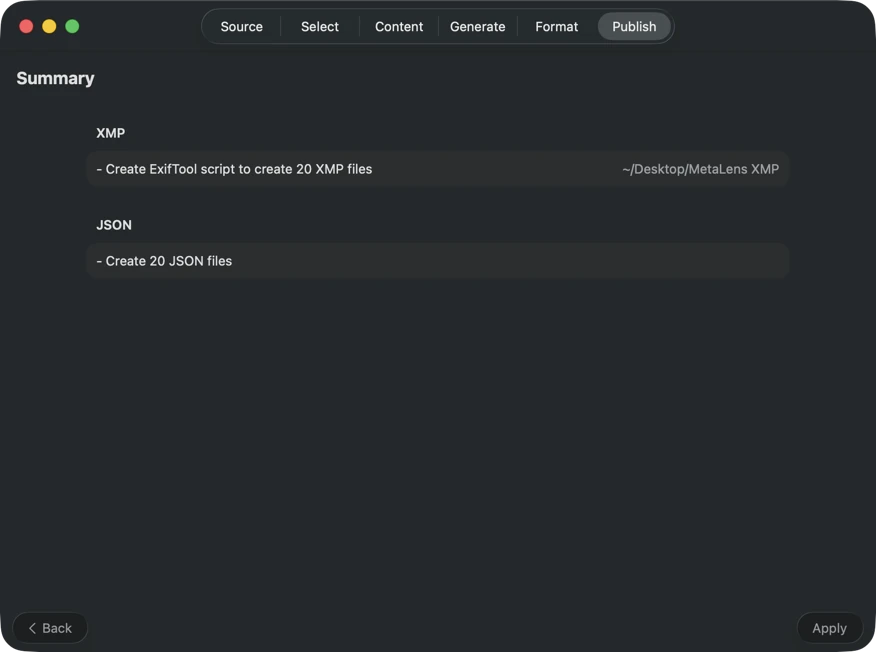

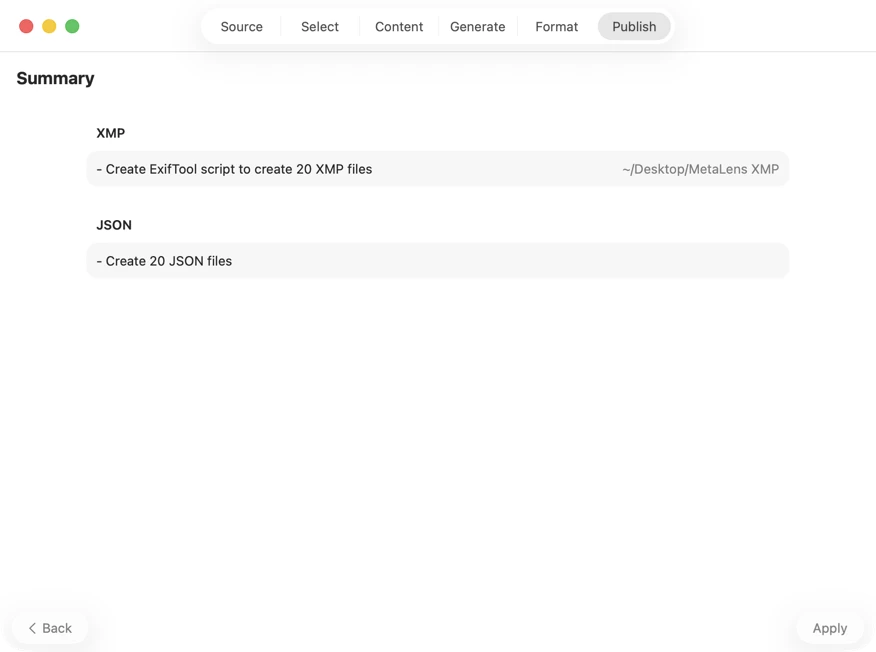

Confirm before anything is written. Review a clear summary of all actions that will be executed, including warnings when existing files or metadata may be overwritten — then publish to apply your selected outputs with confidence.

One-Time Purchase

VAT included (except in the US and Canada)

Secure payment via FastSpring